Analytics API¶

Introduction¶

The Analytics API service of ORamaVR is built with ASP.NET Core 3.1, like the Login service.

It is a web API that relies on Microsoft technologies such as ASP.NET Core and Azure Blob Storage.

Our platform runs on Azure cloud infrastructure for its ease of deployment, native support, and scaling. As a result, changes to the codebase can be tested and deployed with familiar Microsoft development tools (e.g., Visual Studio or Visual Studio Code).

All analytics data are stored in the cloud using Microsoft Azure Blob Storage. The storage structure and the files themselves are described in detail in the following sections.

Purpose¶

Analytics API is a service independent of Login. This separation of concerns decouples business operations and reduces overall complexity.

Additionally, Analytics API is computationally expensive, since it is responsible for storing, processing, and retrieving the analytics gathered from users in VR modules. Decoupling the services therefore keeps this overhead away from Login, which mostly performs CRUD operations.

Moreover, downtime in Analytics API does not prevent users from accessing your VR modules (i.e., checking out a license).

Omitted information¶

The project structure of Analytics API is very similar to the Login service.

The main difference is that the Analytics service is a pure API project without any associated Views; in other words, it does not follow the .NET MVC pattern. No web pages are deployed, only an HTTP API that supports operations such as GET, POST, and PUT.

For this reason, some information about the project structure is omitted to keep the documentation concise. You can read more about ASP.NET Core in the Login service documentation.

Connecting To Identity¶

To ensure users who request data from AnalyticsAPI are authenticated and authorized, AnalyticsAPI delegates Bearer tokens to the LoginService for verification.

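This connection is configured in the Startup class. More specifically:

```csharp
// Keep the original JWT claim names instead of mapping them to legacy .NET claim types.
JwtSecurityTokenHandler.DefaultInboundClaimTypeMap.Clear();

services.AddAuthentication(JwtBearerDefaults.AuthenticationScheme)
    .AddJwtBearer(JwtBearerDefaults.AuthenticationScheme, options =>
    {
        // The Login service (IdentityServer) that issues the tokens.
        options.Authority = IdentityServerUrl;
        // Allows a plain-HTTP authority; intended for development only.
        options.RequireHttpsMetadata = false;
        // Keeps the token available after authentication, e.g. for forwarding it to Login.
        options.SaveToken = true;
        options.TokenValidationParameters = new TokenValidationParameters
        {
            // The audience claim is not checked.
            ValidateAudience = false
        };
    });
```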

The IdentityServerUrl value is read from the appSettings.*.json files (the IdentityServer:IdentityUrl setting).

Retrieving user data¶

Moreover, AnalyticsAPI communicates with Login to request user-related data, for instance which products a user or organization owns, or user and organization information.

To do so, an AuthenticationDelegationHandler class copies the JWT Bearer token from the original user request into the subsequent request to the Login service, thereby delegating authorization and making the request on behalf of the user.

Communication between the two services therefore takes place over HTTP (request/response).
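The token forwarding described above can be sketched as a `DelegatingHandler`. This is not the service's actual AuthenticationDelegationHandler: the class name `BearerTokenForwardingHandler` and the token-provider delegate are assumptions, chosen so that the example depends only on `System.Net.Http`. In the service, the token would presumably be taken from the incoming request.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical sketch: attaches the caller's bearer token to every outgoing request.
public sealed class BearerTokenForwardingHandler : DelegatingHandler
{
    // Assumption: returns the raw token of the current user request, or null if there is none.
    private readonly Func<string> _currentToken;

    public BearerTokenForwardingHandler(Func<string> currentToken)
    {
        _currentToken = currentToken ?? throw new ArgumentNullException(nameof(currentToken));
    }

    protected override Task<HttpResponseMessage> SendAsync(
        HttpRequestMessage request, CancellationToken cancellationToken)
    {
        var token = _currentToken();
        if (!string.IsNullOrEmpty(token))
        {
            // Forward the token so that Login authorizes the call on behalf of the user.
            request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", token);
        }

        // Hand the request to the next handler in the pipeline.
        return base.SendAsync(request, cancellationToken);
    }
}
```

Injecting the token source as a delegate keeps the handler easy to test in isolation, since no web host is needed to exercise it.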

Azure Blob Storage¶

Azure Blob Storage serves as our cloud storage system. Specifically, we utilize Azure Blob Containers to save the user analytics on the cloud, to be able to load them on the analytics platform at a later stage. The users’ analytics are uploaded at the end of each operation to the specified container in the form of blobs.

We mainly use Block Blobs for the analytics data and Append Blobs for the analytics metadata (e.g., date, average score, and time).

Note

Analytics metadata are generated automatically during the Upload request and stored in a “meta-data” folder in the user’s container path. There is one metadata Append Blob per ORamaVR operation.

Storing Data in Azure Containers¶

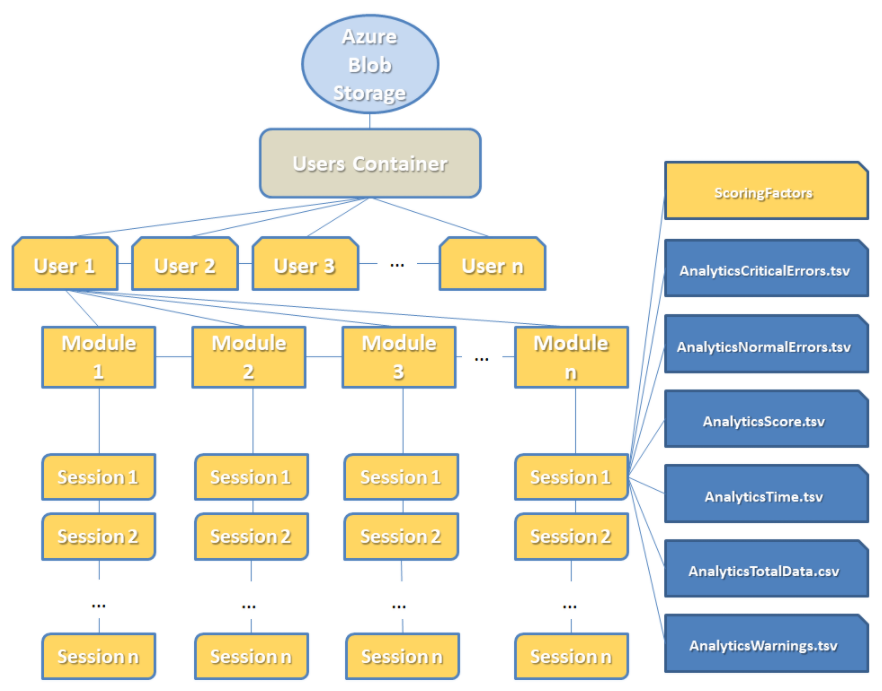

The main hierarchy of the data we store in Azure Blob Storage system is visible in the image below.

User related data available on our platform consist of score and performance metrics based on our modules.

Note

Data is stored per user session.

Inside the Users container, the following structure exists:

- User Folders: One folder per user. Each of these folders contains all the files needed to track the respective user’s progress.
- Module Folders: Each user folder contains one or more module folders, named after the respective modules. A module folder is generated when the user runs that module for the first time.
- Session Folders: These folders are contained inside their respective module folder. Each session folder represents a single user session of the module and is created when the user finishes a complete playthrough of that module.

Generally, we store the following data for each user:

- The number of critical errors in each module session and the name of the action where they occurred.
- The number of non-critical (or normal) errors in each module session and the name of the action where they occurred.
- The score for each action in each module session.
- The time the user needed for each action in each module session, measured in seconds.
- The total data (all errors, critical errors, warnings, final score) for each module session.
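To make the folder hierarchy concrete, the following minimal sketch composes a blob name from a user, a module, and a session. The class `AnalyticsBlobPaths`, the session identifier format, and the file name are hypothetical; the documentation does not state how session folders or files are actually named.

```csharp
using System;

// Hypothetical helper that mirrors the user/module/session hierarchy described above.
public static class AnalyticsBlobPaths
{
    // Returns "<username>/<module>/<session>/<fileName>".
    public static string ForSessionFile(string username, string module, string session, string fileName)
    {
        return string.Join("/",
            Segment(username, nameof(username)),
            Segment(module, nameof(module)),
            Segment(session, nameof(session)),
            Segment(fileName, nameof(fileName)));
    }

    // Rejects empty segments and segments that would introduce extra folder levels.
    private static string Segment(string value, string parameterName)
    {
        if (string.IsNullOrWhiteSpace(value))
            throw new ArgumentException("A path segment must not be empty.", parameterName);
        if (value.Contains("/"))
            throw new ArgumentException("A path segment must not contain '/'.", parameterName);
        return value.Trim();
    }
}
```

For example, `AnalyticsBlobPaths.ForSessionFile("alice", "ExampleModule", "session-1", "scores.json")` returns `alice/ExampleModule/session-1/scores.json`. Blob Storage has no real directories by default, so the "folders" are simply prefixes of the blob name separated by `/`.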

Upload Post Request & Azure Blob System¶

The parameters of the Upload Post Request and their purposes for the Azure Blob Storage System are the following:

| Parameter | Purpose |
|---|---|
| Username | The username of the current user, specifying the folder name in the storage container root. |
| Operation | The name of the product/operation that the user played, indicating the folder inside the user folder where the analytics for this product will be uploaded. |
| files | The files containing the analytics, which will be placed inside the operation folder (indicated by the Operation parameter), in the folder for the specific session. |
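A client-side request carrying these three parameters could be assembled as in the sketch below. It assumes that the endpoint accepts multipart form data and that the caller supplies the upload URL and a bearer token; neither the route nor the encoding is specified in this documentation, and the class `UploadRequestSketch` is hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Net.Http;
using System.Net.Http.Headers;

// Hypothetical sketch of an Upload POST request; the form encoding is an assumption.
public static class UploadRequestSketch
{
    // Builds a multipart body with the Username, Operation, and files parameters.
    public static MultipartFormDataContent BuildContent(
        string username, string operation, IEnumerable<string> filePaths)
    {
        var content = new MultipartFormDataContent();
        content.Add(new StringContent(username), "Username");
        content.Add(new StringContent(operation), "Operation");

        foreach (var path in filePaths)
        {
            // Each analytics file becomes one "files" part that keeps its original file name.
            var file = new ByteArrayContent(File.ReadAllBytes(path));
            file.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");
            content.Add(file, "files", Path.GetFileName(path));
        }

        return content;
    }

    // Wraps the body in a POST request that carries the user's bearer token.
    public static HttpRequestMessage BuildRequest(Uri uploadUri, string bearerToken, HttpContent content)
    {
        var request = new HttpRequestMessage(HttpMethod.Post, uploadUri) { Content = content };
        request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", bearerToken);
        return request;
    }
}
```

The resulting request can be sent with `HttpClient.SendAsync`. The upload URL is passed in by the caller because the actual route of the Upload endpoint is not given here.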

SQL Database¶

Besides Azure Blob Storage, which is well suited to large volumes of data and large numbers of files (blobs), we also keep a separate database, mainly to record how many sessions a user has played and to store summaries of user sessions.

Keeping this kind of data in a database is very convenient: it avoids navigating through Azure blobs and processing large amounts of data every time we need, for example, the last user session on a specific product.

Database migrations and table schemas are applied in the same manner as in Login, using EF Core code-first migrations.

Whenever you start AnalyticsAPI, any pending migrations are applied.

Getting Ready for Development¶

1. Local SQL Database¶

As described in the section above, AnalyticsAPI uses a SQL database, so for development you need to create a local one.

If you don’t know how, see the steps outlined in Login – Local SQL Database.

The recommended name is AnalyticsDb, but you can change it to suit your needs.

2. Azure Storage¶

Unfortunately, we currently do not have a local alternative to Azure Blob Storage. Therefore, even in a development environment, you have to create a Storage Account in Azure.

Note

The recommended Storage Account Type that supports Blobs is General-Purpose V1 or General-Purpose V2 (V2 is not fully tested yet).

After creating the Storage Account, proceed to create a private Container with the name user-analytics.

Warning

Do not change the container name.

3. App Settings¶

Next, we need to configure the appSettings for the development environment.

Open the appSettings.Development.json file and you will see a configuration similar to the snippet below:

```json
{
  "AppSettings": {
    "LogsStorageConnectionString": "",
    "DataStorageConnectionString": ""
  },
  "IdentityServer": {
    "IdentityUrl": ""
  },
  "ConnectionStrings": {
    "AnalyticsDb": ""
  },
  "Logging": {
    "LogLevel": {
      "Default": "Information",
      "Microsoft": "Warning",
      "Microsoft.Hosting.Lifetime": "Information"
    }
  }
}
```

Fill in the database connection string in the ConnectionStrings:AnalyticsDb section, as described in Login – Local SQL Database.

Then, fill in the Login (IdentityServer) URL in the IdentityServer:IdentityUrl section, for instance https://localhost:44355.

Finally, set the Azure Storage connection strings in AppSettings:

Retrieve the connection string from the Azure Storage Account and fill it in the DataStorageConnectionString section.

(Optional) The LogsStorageConnectionString is for storing logs.
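Before starting the service, it can help to check that the required settings are actually filled in. The sketch below is a standalone helper, not part of AnalyticsAPI: the class `AppSettingsCheck` is hypothetical, and it only inspects the three keys named in this section using `System.Text.Json`.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical helper that reports required settings left empty in an appSettings file.
public static class AppSettingsCheck
{
    // Section/key pairs that this section asks you to fill in.
    private static readonly string[][] RequiredKeys =
    {
        new[] { "AppSettings", "DataStorageConnectionString" },
        new[] { "IdentityServer", "IdentityUrl" },
        new[] { "ConnectionStrings", "AnalyticsDb" },
    };

    // Returns the "Section:Key" names whose values are missing or blank.
    public static List<string> FindMissing(string json)
    {
        var missing = new List<string>();
        using (var document = JsonDocument.Parse(json))
        {
            foreach (var path in RequiredKeys)
            {
                var isSet = document.RootElement.TryGetProperty(path[0], out var section)
                    && section.ValueKind == JsonValueKind.Object
                    && section.TryGetProperty(path[1], out var value)
                    && value.ValueKind == JsonValueKind.String
                    && !string.IsNullOrWhiteSpace(value.GetString());

                if (!isSet)
                {
                    missing.Add(path[0] + ":" + path[1]);
                }
            }
        }
        return missing;
    }
}
```

Calling `AppSettingsCheck.FindMissing(File.ReadAllText("appSettings.Development.json"))` on the unmodified template would list all three keys, since their values are empty strings. The optional LogsStorageConnectionString is deliberately not checked.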

4. Start the Service¶

By default, AnalyticsAPI runs on http://localhost:5002.

Unlike Login, AnalyticsAPI does not need a TLS/SSL (HTTPS) certificate for testing purposes.

Warning

A TLS/SSL certificate is necessary in production environments.

If you followed all steps so far, you are ready to hit the Start button.

Danger

Login has to be running in order to validate incoming JWT tokens!

Now you can test all API endpoints.

Note

The easiest way to populate your API with data is by uploading analytics from Unity!

Getting Ready for Production¶

1. App Settings¶

In the previous section, we modified the appSettings.Development.json file for working locally.

This time, modify appSettings.json in the same manner for use in deployment.

As before, insert the database connection string in ConnectionStrings:AnalyticsDb, this time using the connection string of the live database in Azure.

The Azure Blob Storage connection strings remain as they are.

Finally, make sure you point to the live Login service!